Origami Studio by Facebook has been around for a little while now. It’s a tool for prototyping apps in such depth that you almost end up building the entire thing; the key difference is that it’s simple enough for a designer to pick up and use. Origami used to be a plugin for Apple’s Quartz Composer, a now-defunct visual programming tool that shipped alongside Xcode, in which nodes or ‘patches’ take an input, perform an operation, and push an output on to the next node.

The programme is now standalone, and while somewhat simple, it opens up realms of possibility for those less comfortable writing code by hand. Origami has gone through some iterations over the years, really fleshing out its best features toward the start of 2019. Here at TD we’ve always had this on our radar, but we’ve been playing with it in a little more detail recently. Turns out it’s got some very handy tricks up its sleeve:

Internet-powered Prototypes

A common gripe with UI prototypes when testing is that they simply don’t carry ‘real’ data. This makes user tests more difficult, and often pulls participants out of the core experience on offer when they have to be told: “Oh this part doesn’t actually do anything, it’s just there to look pretty.” or “You can only click on the first four there, sorry!” Adding network requests to Origami is incredibly simple, and means you can pull real-time data from just about any endpoint you like, making the prototype’s content options endless. Here we’ve got a simple-looking country code detector for entering a phone number. Based on whichever format the user types in, this prototype can recognise the phone number’s home country. It can then format the number correctly for use in a production backend if need be.

This is an incredibly simple use of the network requests feature in a microinteraction. The patches shown demonstrate how this one works. It looks a little complicated at first (much of this is definitely due to my poor architecture), but once you consider that each operation is handled by a single patch, it begins to make sense very quickly. The concept as a whole provides almost endless room for tinkering. For example, you could use the same network request feature to pull data from the Google Places API based on the device’s IP address to build a ‘fake GPS’ in-prototype. Communities online have been able to populate weather apps with real weather; bring live hotel prices into booking app prototypes; and even link prototypes up to a Raspberry Pi to coordinate real-world actions using this feature. (You can see some of the things people have gotten up to at: https://www.facebook.com/groups/origami.community).
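
To give a flavour of what sits behind the country code detector described above, here is a minimal Python sketch of the kind of lookup an endpoint could perform. It is not the service our prototype calls: the `detect_country` function and the tiny `DIAL_CODES` table are assumptions made purely for illustration, and a real service would cover every country and handle national formats too.

```python
# Hypothetical, deliberately tiny dial-code table (illustration only).
DIAL_CODES = {
    "1": "United States / Canada",
    "33": "France",
    "44": "United Kingdom",
    "49": "Germany",
    "353": "Ireland",
}


def detect_country(raw_number):
    """Return (country, normalised_number) for an international number, else None."""
    stripped = raw_number.strip()
    # Keep only the digits so spaces, brackets and dashes don't matter.
    digits = "".join(ch for ch in stripped if ch.isdigit())

    if stripped.startswith("+"):
        pass  # already in international format
    elif stripped.startswith("00"):
        digits = digits[2:]  # "00" is a common international dialling prefix
    else:
        return None  # national format: the number alone doesn't reveal the country

    # Try the longest prefix first so "353" is matched before any shorter code.
    for length in (3, 2, 1):
        country = DIAL_CODES.get(digits[:length])
        if country:
            return country, "+" + digits
    return None
```

In a prototype, the network request would send whatever the user has typed to an endpoint along these lines and display the country and tidied-up number that come back.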

Logic and Storage

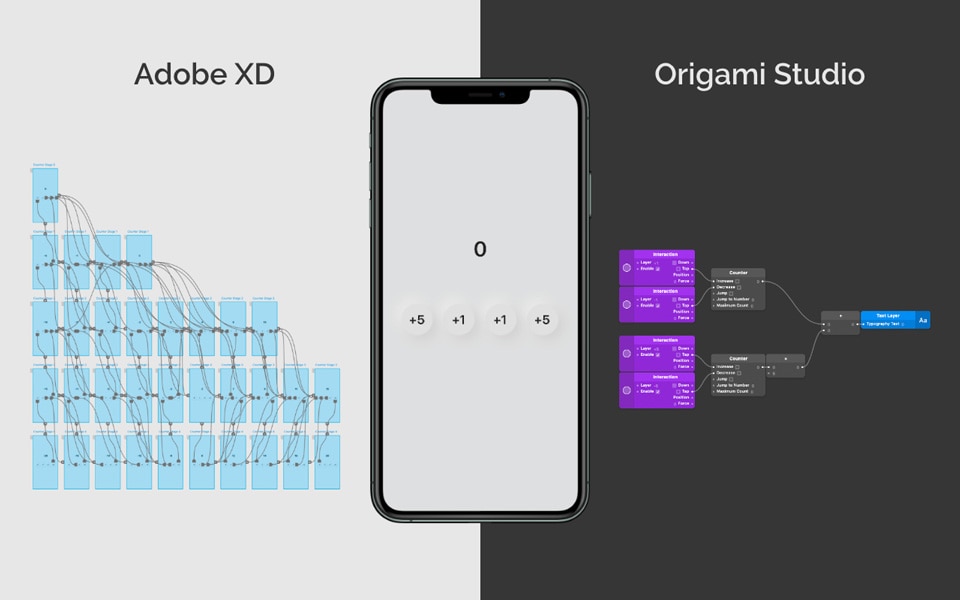

Something commonly missed in prototypes is the addition of logic. Programmes such as Adobe XD, InVision or Sketch can’t hold values in memory within their prototypes. To make things work, we instead often have to create ‘memory states’ which mimic the storing of information. This is where tapping certain elements on screen brings up an entirely different screen with a very slight component change. XD’s addition of component states has improved upon this massively, but it’s still not quite the same as real logic. To demonstrate, we have two versions of a simple counter, one built in Origami Studio and one in Adobe XD. Due to the artboard-based architecture of most prototyping platforms, the XD version becomes incredibly convoluted very quickly, even with a four-tap-only prototype. As much as this isn’t necessarily what hybrid design/prototyping tools are meant for, logic allows for what some may call a ‘super-high-fidelity’ prototype.
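
The difference is easier to see written out. The sketch below is an illustration in Python rather than anything taken from either tool: `Counter` stands in for a prototype with real logic, and `artboards_needed` assumes the simplest artboard approach, with one screen drawn per value the counter can show.

```python
class Counter:
    """Real logic: a single stored value, however many times the user taps."""

    def __init__(self):
        self.value = 0

    def tap(self):
        self.value += 1
        return self.value


def artboards_needed(max_taps):
    """Assumed artboard approach: one screen per displayable value (0..max_taps)."""
    return max_taps + 1


counter = Counter()
for _ in range(4):
    counter.tap()

print(counter.value)        # the value after four taps
print(artboards_needed(4))  # screens needed to fake those same four taps
```

With stored state the prototype stays the same size no matter how high the count goes; with one artboard per value, every extra tap you want to support means another screen and another link to maintain.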

Device Motion and Camera Access

The ability to access a device’s more ‘intimate’ features, such as motion, extra sensors like those used for 3D Touch, or the camera, is something that many rapid prototypes need when iterating. Most prototyping programmes currently available can’t grant this level of access, so it’s super handy to have when needed.

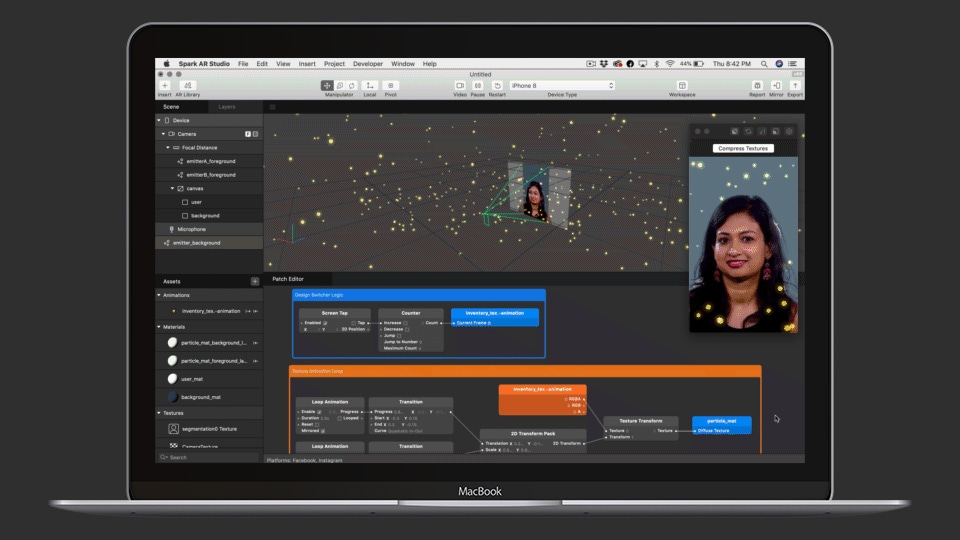

If you combine the camera and accelerometer facilities, and an awful lot of clever maths, you can start to demo what AR experiences might look like. Best of all, Facebook extended their previous camera effects platform to actually include a much better version of all of this in a standalone AR prototyping package. No scary maths involved! You can find this at https://sparkar.facebook.com.

What should any of this mean for product designers?

In summary, a lot for some, not a lot for others. While these advanced features look all well and good for Origami Studio, the rapid UI design facilities found in apps like Adobe XD, Figma and Sketch are invaluable. Origami simply doesn’t have this kind of speed and facility. It likely won’t ever, primarily due to its slightly more detailed build process. It is simply there to let you quickly build prototypes that would otherwise have to be coded from scratch. Development of Origami Studio is still ongoing, though at a slightly slower pace than that of Spark AR. Either way, it’ll be very interesting to see how these pan out. Both are very useful tools for the arsenal.

Article by Jack Howell, UI/UX Designer at The Distance