App Clips are a new concept that Apple introduced as part of its iOS 14 unveiling at WWDC this year. On the surface, they look like a carbon copy of Android’s Instant Apps feature, but they are actually far more than that, and they hold some interesting advantages over the Android solution. Unusually, they also give us quite a strong insight into where Apple wants to go with its whole mobile product line.

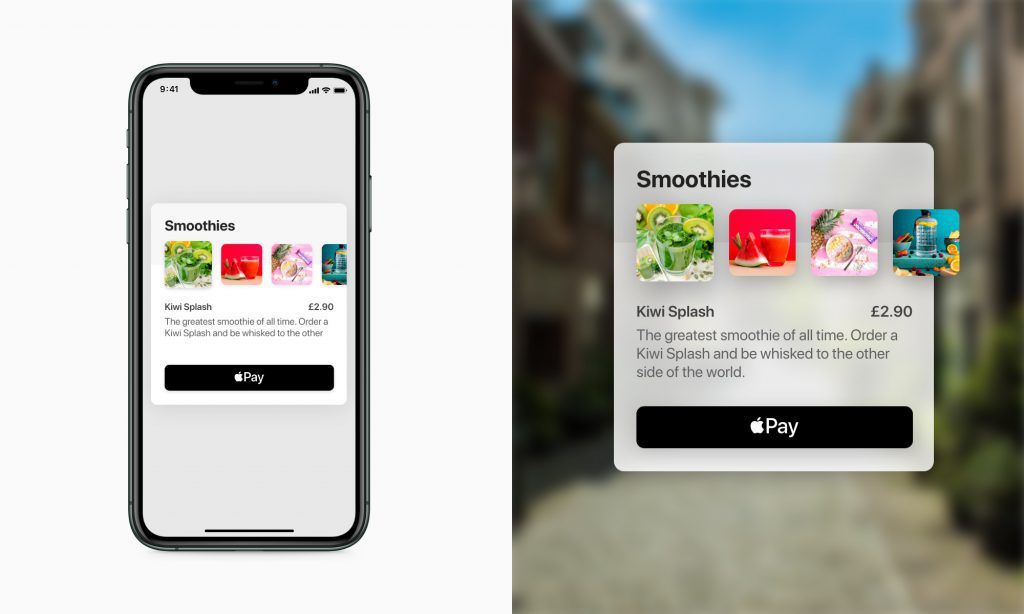

For those who haven’t seen them, an App Clip is a small, simplified ‘clip’ of an iOS app which loads on demand. Each one represents a single flow in an app, with encouragement to download the full app to unlock additional features. You can access these clips in a plethora of ways: via NFC tags, web links, QR codes, or Apple’s own NFC/QR combination, the “App Clip code”. For more information, Apple’s own explainer is relatively helpful:

https://www.cnet.com/videos/share/app-clips-get-you-the-apps-you-need-right-when-you-need-them/

Android users have been able to taste apps from the Google Play store without the commitment for years, since Google released a similar feature way back in 2017. The only real difference is in the emphasis placed on how it should be used, and that emphasis is the key to Apple’s chances of making a success of this feature. It’s a multi-pronged approach, rooted primarily in AR, and it actually starts with SwiftUI.

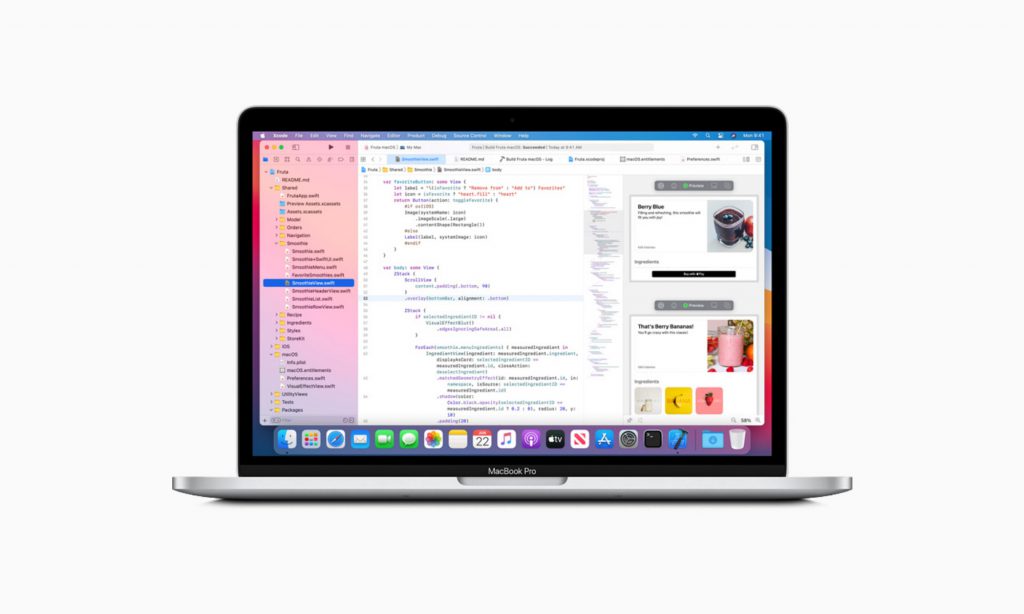

Programming for cross-platform with SwiftUI

SwiftUI was introduced last year as part of Apple’s push to make coding a little easier and more “declarative”, as they like to say. It’s an incredibly flexible way to lay out applications, and this year’s focus has been on creating components with SwiftUI on a canvas. Because developers create components in such a robust and flexible manner, shifting these components between platforms is almost instant. As a result, you can now use SwiftUI to build Apple Watch complications, new iOS 14 widgets, and more.
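To make “declarative” a little more concrete, here is a minimal sketch of the kind of small, self-contained component described above. The names `MenuItem` and `MenuItemRow` are hypothetical and invented purely for illustration; the view only describes what a row should contain and leaves the platform-specific layout to SwiftUI.

```swift
import SwiftUI

// Hypothetical model: a single item a customer might order through a clip.
struct MenuItem: Identifiable {
    let id: Int
    let name: String
    let pricePence: Int

    // Price as a display string, e.g. 350 -> "£3.50".
    var displayPrice: String {
        let pounds = pricePence / 100
        let pence = pricePence % 100
        return "£\(pounds)." + (pence < 10 ? "0\(pence)" : "\(pence)")
    }
}

// A small component: it declares what a row looks like,
// and SwiftUI decides how to render it on each platform.
struct MenuItemRow: View {
    let item: MenuItem

    var body: some View {
        HStack {
            Text(item.name)
            Spacer()
            Text(item.displayPrice)
                .foregroundColor(.secondary)
        }
        .padding()
    }
}
```

Because the row depends only on the `MenuItem` it is given, the same struct could in principle be included in a full app target and in a smaller target alongside it.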

Component-based app development has always been around, but SwiftUI makes it easier than ever before, adding extras like visual editors and the ability to automatically insert responsive stacks and elements from Apple’s own object library. Best of all, it’s all native code, and it compiles to be really small. This way, when it comes to App Clips, all you have to do is check off the parts of the app you want to work as a clip, play with Plists a little, and you’re done. The 10MB size limit on App Clips should more than cover this in most use cases. Size limits are very important in this arena, since they are a major pain point for Android Instant Apps. Large amounts of refactoring often need to be done to bring an app under Android’s 4MB Instant App size limit.

The App Clip approach

The second prong here is the way Apple wants us to use App Clips. The company is due to produce new “App Clip codes” for us to order later this year. The codes combine QR codes and NFC tags to give users the choice on how they want to launch their App Clip.
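However a clip is launched, whether by NFC tag, QR code or link, it is handed a URL and has to decide which flow to show. As a rough sketch of that routing step, the function below pulls a flow name and an optional identifier out of such a URL. The `ClipInvocation` type, the `example.com` URL shape and the `id` query parameter are all assumptions made up for this example, not anything Apple prescribes.

```swift
import Foundation

// Hypothetical result of routing an invocation URL.
struct ClipInvocation: Equatable {
    let flow: String      // first path component, e.g. "unlock"
    let itemID: String?   // value of the assumed "id" query item, if present
}

// Parses an invocation URL such as https://example.com/unlock?id=42.
// Returns nil when the URL has no path component to route on.
func parseInvocation(_ url: URL) -> ClipInvocation? {
    guard let components = URLComponents(url: url, resolvingAgainstBaseURL: false) else {
        return nil
    }
    // Split the path and drop the empty pieces left by leading or trailing slashes.
    let parts = components.path.split(separator: "/").map(String.init)
    guard let flow = parts.first else { return nil }
    // Look for the assumed "id" query parameter.
    let itemID = components.queryItems?.first(where: { $0.name == "id" })?.value
    return ClipInvocation(flow: flow, itemID: itemID)
}
```

In a SwiftUI clip, the URL would typically arrive through `onContinueUserActivity(NSUserActivityTypeBrowsingWeb)`, read from the activity’s `webpageURL`, and could then be passed to a function like this one.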

Using NFC makes perfect sense for accessing App Clips in physical situations. NFC tags are just about contactless, and don’t require any additional taps to use. Simply hold your phone close to the tag and your App Clip can launch. Adding QR codes to the mix is a little more curious. At the moment you have to launch your camera app to be able to scan them, which is actually quite poor UX. What they are great for, however, is AR. It is surely no coincidence that Apple has had a decent number of members from its AR team working on this project.

Developers are now creating modular components for their apps that are designed to work on all platforms, building thousands of new bite-sized experiences. A plethora of Apple-proprietary App Clip code stickers will soon appear on every shop door and cinema kiosk, on scooters and taxis, on your smoothies and clothes. All you need now is a convenient way to take these all in. Enter the much-anticipated Apple Glasses.

Apple Glasses

Rumour has it that the first release of this product will come out this year. It’s likely that holographic displays won’t be in front of our eyes quite yet, but even the ability for a camera to scan these App Clip codes and prepare content for the iPhone before we open them up would be a stepping stone toward the real deal. In future generations of Apple Glasses, the ability to view App Clips in situ through your own eyes will be a game-changer. App Clips using SwiftUI components would more or less be able to work with AR out of the box. Simply rearrange the way they display to suit a familiar AR OS, which Apple will presumably come out with, and you’re good to go.

The opportunity presented by App Clips and Instant Apps is huge, especially when it shifts toward enabling AR experiences. In many ways, they could represent the next step in Google and Apple’s race to take AR to the mainstream. Where do you think this could go? Perhaps into your latest and greatest app idea? Let us know by getting in touch at hello@thedistance.co.uk

Article by Jack Howell, UI/UX Designer at The Distance